Make Your Data Pipeline Super-Efficient by Unifying Machine Learning and Deep Learning

Santosh Rao

In my previous post, I explained how NetApp and NVIDIA bridge the CPU and GPU universes, enabling you to tackle a wide variety of computing problems more efficiently: NVIDIA GPU computing helps you significantly reduce server sprawl, and NetApp helps you reduce data sprawl by coupling flash, NVMe-oF, and SCM acceleration with intelligent data management, storage efficiency, and tiering. With technologies like the NetApp® Data Fabric, Kubernetes, and the NVIDIA GPU Cloud, the two companies enable seamless use of the hybrid cloud, creating a common architecture and software stack from edge to core to cloud.

In this post, I explore an important example of how this integration works in practice: the unification of data prep, classical machine learning (ML), and deep learning (DL) in artificial intelligence (AI).

Traditional Approaches Separate Data Prep, ML, and DL

As NVIDIA explained in a recent blog post about NVIDIA RAPIDS, data exploration is a key part of data science and AI. To prepare a dataset to train a machine-learning algorithm, you first have to understand the data. Then you have to clean up data types and data formats, fill in gaps in the dataset, and engineer features for the learning algorithm. This process is often referred to as “extract, transform, load” (ETL), and it’s usually iterative and exploratory. Data scientists may apply a variety of classic machine-learning algorithms, such as singular value decomposition, principal component analysis, or XGBoost, as they explore and transform the data. As datasets grow, the interactivity of this process declines.
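
To make the data prep steps concrete, here is a minimal sketch in plain Python of cleaning up data types, filling in gaps, and engineering a feature. It is an illustration only, not RAPIDS code: the records, the column names, and the helper functions (`to_float`, `fill_gaps`, `prepare`) are all hypothetical, and filling gaps with the column mean is just one simple choice among many.

```python
from statistics import mean

# Hypothetical raw records, as they might arrive from a CSV export.
raw_rows = [
    {"sensor": "a", "temp_c": "21.5", "humidity": "40"},
    {"sensor": "b", "temp_c": "", "humidity": "55"},
    {"sensor": "a", "temp_c": "23.5", "humidity": ""},
]


def to_float(value):
    """Clean up a data type: numeric strings become floats, blanks become None."""
    return float(value) if value.strip() else None


def fill_gaps(rows, column):
    """Fill missing values in one column with the mean of the observed values."""
    observed = [row[column] for row in rows if row[column] is not None]
    fallback = mean(observed)
    for row in rows:
        if row[column] is None:
            row[column] = fallback


def prepare(rows):
    # Step 1: clean up data types and formats.
    typed = [
        {
            "sensor": row["sensor"],
            "temp_c": to_float(row["temp_c"]),
            "humidity": to_float(row["humidity"]),
        }
        for row in rows
    ]
    # Step 2: fill in gaps in the dataset.
    for column in ("temp_c", "humidity"):
        fill_gaps(typed, column)
    # Step 3: engineer a feature for the learning algorithm
    # (here, a derived Fahrenheit column).
    for row in typed:
        row["temp_f"] = row["temp_c"] * 9 / 5 + 32
    return typed


prepared = prepare(raw_rows)
for row in prepared:
    print(row)
```

On a real dataset, each of these steps scans every row, which is why the exploratory loop slows down as the data grows.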

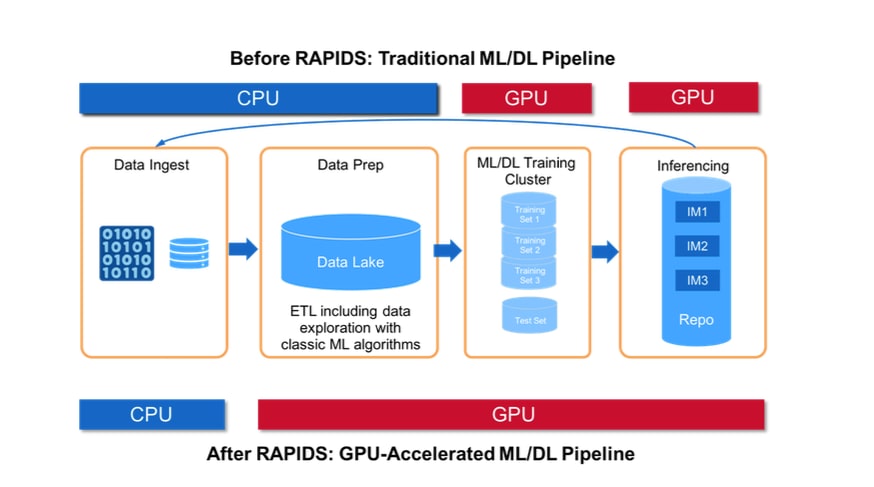

In the usual approach to data science, GPU acceleration is primarily used during deep-learning model training. Most of the data pipeline, including ingest and data prep (that is, ETL including data exploration), is performed using CPUs. Once a model is trained, inferencing may use either CPUs or GPUs.

Processing on CPUs and transitioning formats between CPUs and GPUs is in itself inefficient, reducing the speed-up benefits of GPUs. The process becomes even more inefficient when separate storage is used in each stage of the pipeline—a common situation. Data must be copied from stage to stage, adding time and complexity to the end-to-end process and leaving expensive resources idle. Even if the same storage is shared for data prep and training, the data has to be loaded into CPU servers for data prep and then reloaded into GPU memory for training.
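
A back-of-the-envelope model shows how stage-to-stage copies add to end-to-end time. The sketch below is an assumption-laden illustration: the `Stage` class, the stage timings, the dataset size, and the copy rate are all hypothetical numbers chosen to keep the arithmetic easy, and the model covers only copies between storage systems, not the reload from CPU servers into GPU memory.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    compute_s: float   # seconds of useful work in this stage
    copy_in_gb: float  # GB copied into this stage's own storage first (0 if shared)


def end_to_end_seconds(stages, copy_gbps):
    """Total pipeline time: compute time plus time spent copying between stages."""
    compute_s = sum(stage.compute_s for stage in stages)
    copy_s = sum(stage.copy_in_gb / copy_gbps for stage in stages)
    return compute_s + copy_s


# All numbers below are hypothetical, chosen only to make the arithmetic easy.
DATASET_GB = 1000
COPY_GBPS = 2

separate_storage = [
    Stage("ingest", compute_s=600, copy_in_gb=0),
    Stage("data prep", compute_s=1800, copy_in_gb=DATASET_GB),
    Stage("training", compute_s=3600, copy_in_gb=DATASET_GB),
]
# Same stages, but with one shared storage pool: no copies between stages.
shared_storage = [Stage(s.name, s.compute_s, copy_in_gb=0) for s in separate_storage]

separate_total = end_to_end_seconds(separate_storage, COPY_GBPS)
shared_total = end_to_end_seconds(shared_storage, COPY_GBPS)
print(f"separate storage: {separate_total:.0f} s")
print(f"shared storage:   {shared_total:.0f} s")
print(f"time spent copying: {separate_total - shared_total:.0f} s")
```

In this toy setup the copies alone account for the difference between the two totals, and the compute resources of the next stage sit idle while each copy runs.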

NetApp and NVIDIA technologies work together to remove these inefficiencies from the entire pipeline. NVIDIA RAPIDS is a suite of open-source software libraries built on CUDA that brings highly parallel GPU technology to the whole data science pipeline. RAPIDS provides GPU acceleration for data preparation as well as for classic machine-learning and deep-learning models.

NetApp technologies accelerate the data science pipeline both with and without RAPIDS. NetApp is the only AI storage vendor that delivers a complete pipeline for big data, machine learning, and deep learning—a pipeline that incorporates the widest variety of data sources on the premises, at the edge, and in the cloud. With NetApp Data Fabric, data flows quickly and easily between locations and is managed with simple, repeatable, and automatable processes. You can integrate diverse, dynamic, and distributed data sources with complete control and protection.

NetApp’s single, unified data lake with in-place analytics eliminates data copies that would otherwise lead to bottlenecks in the data science pipeline. NetApp customers have been benefiting from in-place data access to accelerate all types of analytics for more than 4 years.

Solve Your Pipeline Challenges

The following additional NetApp technologies may have significant benefits in solving specific data science pipeline challenges:

- FabricPool for cold data management. With NetApp FabricPool technology, cold data migrates to object storage automatically based on defined policies, allowing data capacity to grow without limits. You can choose either on-premises object storage or S3-compatible cloud storage.

- NetApp FlexCache® software solves data locality challenges. With FlexCache, data can be cached in physically separate clusters, allowing a data lake to be in a different location than the GPU infrastructure. For example, GPU infrastructure could be close to the data science team, with the data lake in a remote data center.

- NetApp Data Availability Services enables hybrid operations. With NetApp Data Availability Services, a data copy can be made available on a public cloud in native object format, allowing a seamless hybrid cloud ML/DL deployment.

- NetApp FlexClone® thin-cloning technology simplifies data sharing. With FlexClone and QoS, CPU and GPU clusters can share data on the same storage platform with logical isolation and separation of service levels.

The NetApp Data Fabric enables you to pursue your data science objectives in the cloud, on the premises, or using a hybrid approach. If you decide to accelerate your data science efforts on the premises, NetApp ONTAP® AI combines NVIDIA and NetApp technologies in a single platform that eliminates performance issues and enables secure, nondisruptive data access, delivering AI performance at scale.

By combining open-source RAPIDS software and ONTAP AI, you can take advantage of NVIDIA GPU acceleration for all your data exploration, data prep, machine-learning, and deep-learning algorithms, with NetApp AFF A800 all-flash storage providing a single storage pool that delivers maximum I/O bandwidth and minimum latency. The AFF A800 delivers up to 25GBps of throughput at 500µs latency. A full scale-out cluster with 12 A800 controller pairs delivers up to 300GBps, which is 6 to 9 times better I/O performance than competing technologies deliver.
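
The cluster figure follows from the per-system figure: 12 controller pairs at up to 25GBps each gives up to 300GBps. The short sketch below reproduces that arithmetic and applies it to a hypothetical 10TB training set. It assumes perfectly linear scaling and sustained peak throughput, which real workloads may not reach, and the function names and the dataset size are illustrative only.

```python
# Figures quoted in the text above (both are "up to" peak values).
GBPS_PER_CONTROLLER_PAIR = 25
CONTROLLER_PAIRS = 12

# Hypothetical dataset size, used only for illustration.
DATASET_GB = 10_000  # 10TB


def cluster_bandwidth_gbps(pairs, per_pair_gbps):
    """Aggregate bandwidth, assuming throughput scales linearly with pairs."""
    return pairs * per_pair_gbps


def seconds_to_read(dataset_gb, bandwidth_gbps):
    """Best-case time to stream a dataset once at the given bandwidth."""
    return dataset_gb / bandwidth_gbps


cluster_gbps = cluster_bandwidth_gbps(CONTROLLER_PAIRS, GBPS_PER_CONTROLLER_PAIR)
single_pair_s = seconds_to_read(DATASET_GB, GBPS_PER_CONTROLLER_PAIR)
full_cluster_s = seconds_to_read(DATASET_GB, cluster_gbps)

print(f"cluster bandwidth: up to {cluster_gbps}GBps")
print(f"one controller pair: {single_pair_s:.0f} s per pass over the data")
print(f"full cluster:        {full_cluster_s:.1f} s per pass over the data")
```

Because deep-learning training typically makes many passes over the same data, the per-pass difference compounds over a training run.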

With ONTAP AI you can:

- Build an integrated data pipeline from edge to core to cloud, enabling data-driven decisions for better business outcomes.

- Simplify deployment of AI infrastructure to speed innovation with a prevalidated and tested architecture.

- Deliver the performance and scalability your business needs for the most demanding AI and deep-learning applications—as well as other high-performance computing and visualization workloads.

NetApp and NVIDIA are working to create advanced tools that eliminate bottlenecks and accelerate results—results that yield better business decisions, better outcomes, and better products.

NetApp ONTAP AI and NetApp Data Fabric technologies and services can jump-start your company on the path to success. Check out these resources to learn about ONTAP AI:

- Solution Brief: NetApp ONTAP AI

- White Paper: Edge to Core to Cloud Architecture for AI

- NetApp Verified Architecture: NetApp ONTAP AI Powered by NVIDIA

- Customer Story: Cambridge Consultants Breaks Artificial Intelligence Limits

- Is Your IT Infrastructure Ready to Support AI Workflows in Production?

- Accelerate I/O for Your Deep Learning Pipeline

- Addressing AI Data Lifecycle Challenges with Data Fabric

- Choosing an Optimal Filesystem and Data Architecture for Your AI/ML/DL Pipeline

- NVIDIA GTC 2018: New GPUs, Deep Learning, and Data Storage for AI

- Five Advantages of ONTAP AI for AI and Deep Learning

- Deep Dive into ONTAP AI Performance and Sizing

- Bridging the CPU and GPU Universes

Santosh Rao

Santosh Rao is a Senior Technical Director and leads the AI & Data Engineering Full Stack Platform at NetApp. In this role, he is responsible for the technology architecture, execution and overall NetApp AI business.

Santosh previously led the Data ONTAP technology innovation agenda for workloads and solutions including NoSQL, big data, virtualization, enterprise apps, and other 2nd and 3rd platform workloads. He has held a number of roles within NetApp and led the original ground-up development of clustered ONTAP SAN for NetApp as well as a number of follow-on ONTAP SAN products for data migration, mobility, protection, virtualization, SLO management, app integration, and all-flash SAN.

Prior to joining NetApp, Santosh was a Master Technologist at HP, where he led the development of a number of storage and operating system technologies, including the company's early-generation products in both areas.